Web VR with the Oculus DK1

Jack Taylor

In my previous blog post, I covered the use of older VR technology within development environments. It demonstrated the installation of the Oculus DK1 on Windows 10, as well as its use with Unity 2017. As a follow-up, this blog post extends the use of the Oculus DK1 to a WebVR development environment using AFrame and HTML. If you have not read the first blog post, please do so first, as it explains how to install and use your old Oculus headset on Windows 10. You can find it here: https://computingnorthampton.blogspot.com/2018/05/vr-on-cheap.html

PLEASE NOTE: When creating this project, I had to use Mozilla Firefox, as other browsers lacked WebVR support for certain platforms.

You will also need to have SteamVR running when testing WebVR scenes, else this will not work! If you have not yet read my other blog post, please do so to ensure that you have covered the installation of your Oculus DK1 headset!
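Before building anything, it can help to confirm that Firefox can actually see the headset through SteamVR. The page below is a minimal sketch and not part of the original walkthrough. It uses `navigator.getVRDisplays()` from the WebVR 1.1 API, which Firefox exposed at the time but which has since been deprecated in favour of WebXR, so the check is only meaningful in a WebVR-era browser setup like the one described here. The page title, element id and messages are placeholders.

```html
<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8">
    <title>WebVR headset check</title>
  </head>
  <body>
    <p id="status">Checking for WebVR support...</p>
    <script>
      // Hypothetical helper page: reports whether the browser can see a headset
      var statusEl = document.getElementById('status');

      if (!navigator.getVRDisplays) {
        // The WebVR 1.1 API is missing entirely
        statusEl.textContent = 'This browser does not expose the WebVR API.';
      } else {
        // getVRDisplays() resolves to an array of VRDisplay objects
        navigator.getVRDisplays().then(function (displays) {
          if (displays.length === 0) {
            statusEl.textContent = 'WebVR is available, but no headset was found. Is SteamVR running?';
          } else {
            statusEl.textContent = 'Found headset: ' + displays[0].displayName;
          }
        }).catch(function (err) {
          statusEl.textContent = 'Error while querying headsets: ' + err;
        });
      }
    </script>
  </body>
</html>
```

If the page reports no headset, check that SteamVR is running and that the DK1 shows up there before moving on.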

You can view the AFrame compatibility chart here: https://aframe.io/docs/0.8.0/introduction/vr-headsets-and-webvr-browsers.html#what_is_virtual_reality

To start your development, you will first need a text editor or IDE. Any editor is suitable for this process; I would recommend Sublime Text or Notepad++. Please ensure that your VR device is connected to your machine before starting.

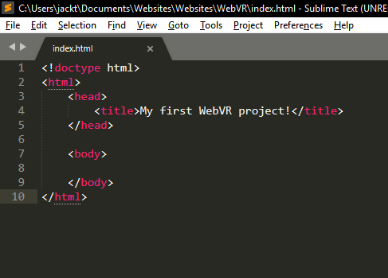

To begin, you will first need to create an index file for your WebVR project. I recommend starting in an empty folder, so you have a clean work environment. When creating the file, make sure that you save it as an HTML file (for example, `index.html`) before you continue.

When you’ve created the file, you will need to start with a layout like mine. However, feel free to customise it as you wish.
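My original layout isn't reproduced in this post. A minimal sketch of an equivalent starting point, assuming a standard HTML5 document, looks like this; the title text is just a placeholder.

```html
<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8">
    <!-- Placeholder title: change it to suit your project -->
    <title>WebVR with the Oculus DK1</title>
  </head>
  <body>
    <!-- The AFrame scene will go here -->
  </body>
</html>
```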

Once you have saved the file and filled it with the contents as shown above, you will be able to start developing a web page!

Now, for WebVR to work as we would like it to, we need to import the script provided by AFrame within the `<head>` tags of your file. You can find the script in the AFrame documentation here: https://aframe.io/docs/0.8.0/introduction/. Once you have inserted this script, your web page will support the AFrame API, which you can use to create your scenes.
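As a sketch, assuming you want the 0.8.0 release that matches the documentation linked in this post, the import is a single `<script>` tag inside `<head>`:

```html
<head>
  <meta charset="utf-8">
  <title>WebVR with the Oculus DK1</title>
  <!-- AFrame 0.8.0, matching the documentation version linked above -->
  <script src="https://aframe.io/releases/0.8.0/aframe.min.js"></script>
</head>
```

If you switch to a newer AFrame release, update both the script URL and the documentation version you follow, as component properties can change between releases.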

To create a scene for your web page, you will need to add a pair of tags between your `<body>` and `</body>` tags. These tags create a scene for you, so you can start putting objects into it. The tags are `<a-scene>` and `</a-scene>`.

Once completed, your file should look like this:
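The original screenshot of the finished file isn't reproduced here. Assuming the basic layout and the AFrame 0.8.0 script from the earlier steps, a minimal sketch of the completed file is:

```html
<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8">
    <title>WebVR with the Oculus DK1</title>
    <!-- Loads the AFrame API -->
    <script src="https://aframe.io/releases/0.8.0/aframe.min.js"></script>
  </head>
  <body>
    <!-- An empty scene: objects are added between these tags -->
    <a-scene>
    </a-scene>
  </body>
</html>
```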

Now we have our scene set up, let’s start adding some surroundings to our scene.

For this example, I will be using some assets provided by my University. If you would like to explore the primitives available for AFrame scenes, you can view them in the AFrame documentation here: https://aframe.io/docs/0.8.0/introduction/html-and-primitives.html.

With AFrame, you can specify different styles and sizes for the objects you add to your scene. For this example, I will be using a handful of AFrame's primitive elements. I will continue to post images of my example as we progress, so you can use them as a reference when creating a project, should you wish to do so.

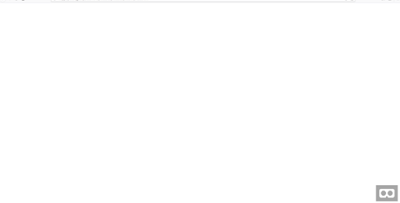

Before I demonstrate the implementation of assets within our scene, I would recommend running your new file in a browser to make sure that everything is working as intended.

Your scene will be blank, and will look like this:

Notice the headset icon in the bottom right of your scene. Clicking it sends the scene to your VR headset and switches your browser to full screen.
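That headset button comes from AFrame's `vr-mode-ui` component, and the scene emits `enter-vr` and `exit-vr` events when VR mode starts and stops. A small sketch for checking that the button really reaches your DK1 is to log those events; the log messages are placeholders, and the script assumes it sits after `<a-scene>` so the element already exists when it runs.

```html
<body>
  <a-scene>
    <!-- Your scene content goes here -->
  </a-scene>
  <script>
    // Runs after <a-scene> in the markup, so querySelector can find it
    var sceneEl = document.querySelector('a-scene');

    // Fired when the headset button (or another trigger) enters VR mode
    sceneEl.addEventListener('enter-vr', function () {
      console.log('Entered VR mode');
    });

    // Fired when VR mode ends, e.g. after pressing Escape
    sceneEl.addEventListener('exit-vr', function () {
      console.log('Exited VR mode');
    });
  </script>
</body>
```

Open the browser's developer console while clicking the headset icon to see the messages.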

Okay, let’s start populating our scene by adding a few elements. We will start with a sky, which will brighten up our project a bit. For this example, here is the code I will be using:
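My original snippet isn't reproduced here. A minimal stand-in, assuming a plain light-grey colour (`#ECECEC`, borrowed from AFrame's own "Hello WebVR" example), is a single `<a-sky>` element placed inside the scene:

```html
<a-scene>
  <!-- A solid-colour background sphere surrounding the whole scene -->
  <a-sky color="#ECECEC"></a-sky>
</a-scene>
```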

Feel free to change the colour around to suit your project needs. Once added, save and run your file again to view your changes. You can do this as many times as you like.

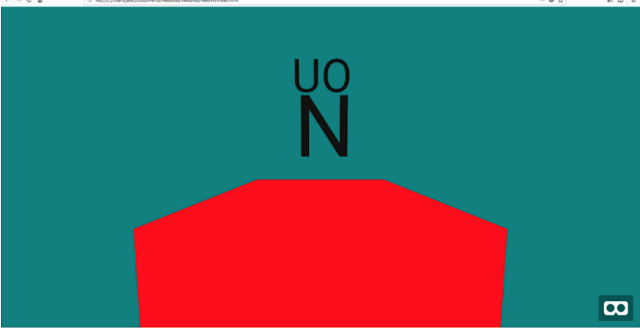

Once saved, your project will look like this:

You can continue to add more elements to your scene as you would like. AFrame allows you to specify different properties for your objects, such as the position, colour, size, and many more.
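To illustrate how those properties are set, here is a sketch showing the same box written two ways: as a primitive with individual attributes, and as a generic `<a-entity>` whose properties are grouped into `geometry` and `material` components. The colour and positions are arbitrary; the second box is shifted sideways only so that the two do not overlap.

```html
<a-scene>
  <!-- Primitive form: each property is its own attribute -->
  <a-box position="0 1 -3" rotation="0 30 0"
         width="1" height="1" depth="1" color="tomato"></a-box>

  <!-- Entity-component form: the same shape and colour, grouped by component -->
  <a-entity position="2 1 -3" rotation="0 30 0"
            geometry="primitive: box; width: 1; height: 1; depth: 1"
            material="color: tomato"></a-entity>
</a-scene>
```

Primitives are convenient shorthand; the entity-component form becomes useful once you start attaching components that have no primitive equivalent.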

Let’s add a few elements to this scene to populate it a bit.

Here is the code for the elements you should add next as part of this example:
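My original listing isn't reproduced here. As a hedged stand-in, the four lines below are based on AFrame's own "Hello WebVR" example rather than my University assets; the positions, sizes and colours are illustrative. They go inside `<a-scene>`, alongside the sky:

```html
<a-scene>
  <!-- Four primitives, each with its own position, size and colour -->
  <a-box position="-1 0.5 -3" rotation="0 45 0" color="#4CC3D9"></a-box>
  <a-sphere position="0 1.25 -5" radius="1.25" color="#EF2D5E"></a-sphere>
  <a-cylinder position="1 0.75 -3" radius="0.5" height="1.5" color="#FFC65D"></a-cylinder>
  <a-plane position="0 0 -4" rotation="-90 0 0" width="4" height="4" color="#7BC8A4"></a-plane>

  <a-sky color="#ECECEC"></a-sky>
</a-scene>
```

Positions are given as "x y z" in metres, with negative z placing objects in front of the default camera.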

As you can see, the four new lines above have new properties, which control how these objects appear in your scene. A full list of the API properties can be found in the documentation (https://aframe.io/docs/0.8.0/components/background.html). Save your file and run it within your browser.

Your scene should now look like this:

Feel free to try this out using your Virtual Reality headset.


That just about covers the basics of using WebVR with your Oculus DK1 headset. I hope this article was helpful! Please feel free to suggest any changes to this post if you think that something is missing. Enjoy your experiments!

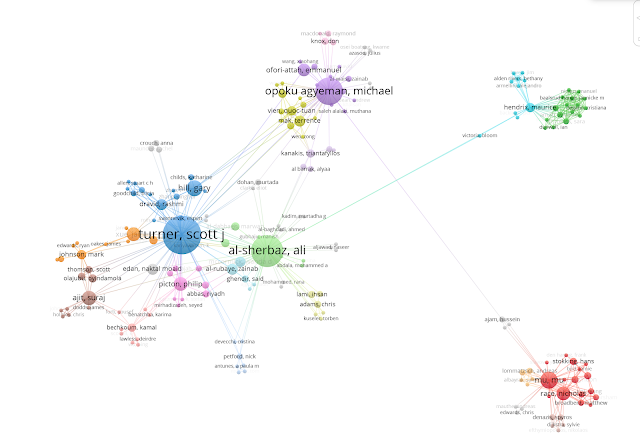

All views and opinions are the author's and do not necessarily reflect those of any organisation they are associated with. Twitter: @scottturneruon